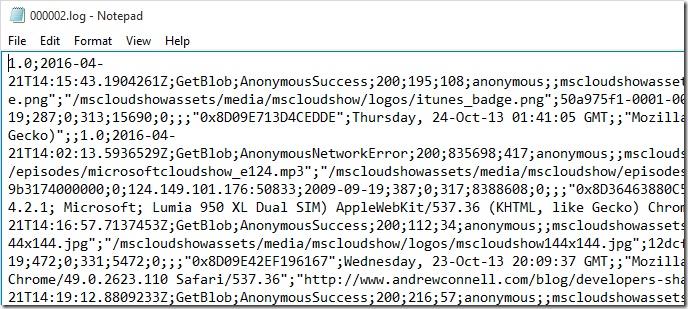

One of the ways AC and I track how many people are listening to the Microsoft Cloud Show episodes we put out is by using the logs created for Azure Storage. These track the various requests for the mp3 files for each episode. You have to turn on this logging for your account and once this is done then log files are written into the /$logs/blob folder of your storage account. You can read more about Azure Storage Analytics here: https://msdn.microsoft.com/en-us/library/hh343270.aspx

However, the way the storage logs are filed does not exactly make analyzing them easy in something like Excel. They are logged in /year/month/day/hour/5min chunk folders/files in CSV format. (detail of what is logged is here).

Until recently our process for downloading the analyzing these was rather laborious and included:

- Download all the logs files.

- Use some PowerShell to squash all the thousands of CSV files into one big one.

- Use SQL Import tool to take the CSV and import each line into a temp table in SQL Azure

- Run a T-SQL script over the data to remove all the rows we were not interested in (we only wanted the logs for downloaded MP3s, not other assets etc.…)

- Mutate the data in various columns for better reporting. e.g. pulling important parts from the User Agent string and pulling an episode number out of the filenames.

- Finally inserting all the new rows into the final reporting Table

- Using Excel to report over the data

It was error prone, painfully slow at times and required all these things to be done each time we wanted new statistics. At times we would go months without doing this and were flying blind on how many people were listening. Not really very good.

What we really needed was an automated process!

Azure Function Apps to the rescue!

In March 2016 Microsoft announced Azure Functions. They are lightweight bits of code that can be run in Azure when certain events occur, like on a timed basis, when an HTTP request is made and when a blob is added to storage. What is nice about them is that you are not paying for a Virtual Machine to sit there mostly idle. You only pay for what you use. You can write them in a variety of languages and trigger them in various ways. If you know how to write a console app in C# they are super easy to understand, same with a hello world app in Node.JS.

They looked perfect for what I wanted to do. I wanted to read in log files as they were created, parse the content out of them & them log that to an Azure SQL Database. From there we just attach Excel to the DB and go to town with graphs and pivot tables etc.…

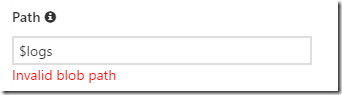

Microsoft.Azure.WebJobs.Host: Invalid container name: $logs.

Problem! Currently it looks like you can’t trigger a Function off blob creation of a log file in the $logs container which is where the files are dropped 🙁 I don’t know if this is something that will change, but ill try and find out.

This means that for now we would have to do one of two things:

- Manually copy log files to another container periodically. Ok solution.

- Make another function that is triggered every 15 mins or so to copy the log files out to another container which would trigger or other function. Better solution.

I opted for copying the blobs on a schedule. I wrote some pretty crude code that:

- Enumerates all the blobs in the source $logs container

- Checks if they exist in the destination container

- Copies them over if they don’t

I say this is crude because it doesn’t keep track of the last blob that it copied and therefore each time it runs it enumerates all the logs each time. This isn’t ideal and I will probably need to make it a bit more sophisticated in the future. But for now running it once a day shouldn’t be a problem.

Update: Since I published the post I updated the copy code to check to see if it needs to sync files since the last time the function ran + 1 extra day (to be safe). This means it wont scan all your logs every time.

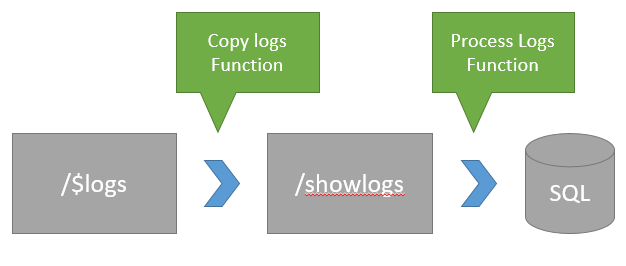

So here is what we have end to end …

- Copy logs from $logs in one subscription to /showlogs in another subscription

- Process each log file as it arrives and put the data in the Database

Show me the code!

Ok so how did I do this. Below is the two Azure Functions. I have tried to comment them so you can follow along.

Copying log files from /$logs to another Azure Blob container.

Next, Processing those log files when they arrive.

I have put all the source for these two functions in a GitHub repo here: https://github.com/LoungeFlyZ/AzureBlobLogProcessing

There are two files for each function:

- project.json – contains some dependency information for libraries that I used in each function

- run.csx – the main azure function code

Hopefully someone else will find this useful!

Azure Functions provide a really handy and simple way to run code periodically. If you are familiar with Node.JS, C#, Python or PHP you should go take a look at them.

-CJ

I’m not a developer but a business intelligence guy. Did you consider PowerBI for this task? It can read azure files, combine and filter them, create derived calculations and auto refresh without a single line of code. And you can connect to them for Excel as well if you want.

Hi,

great stuff. having some trouble though.

I get the following exception: CsvHelper: The conversion cannot be performed.

I’ve merged both scripts into one so that the whole code is launched with Timer Trigger.

I’m passing the blob value as follows:

…

CloudBlockBlob blob = new CloudBlockBlob(item.Uri);

using (TextReader sr = new StringReader(blob.Name))

…

Have i messed up sth?

Hi

I used your solution and have written up a post: https://davemateer.com/azure/2018/11/07/Azure-Functions-to-Count-Downloads-from-Blob-Storage.html

My version uses v2 of Azure Functions, and is otherwise very similar.

Thanks again

Dave