Anyone who has listened to me rant about how interesting Docker is on the Microsoft Cloud Show may have caught me talking about Tutum.

The short story on Tutum is that it provides an easy to use management application over Virtual Machines that you want to run your apps on with Docker. It is (sort of) cloud provider agnostic in that it supports Amazon Web Services, Microsoft Azure and Digital Ocean among others.

It was bought by Docker late last year and was recently re-released as Docker Cloud.

What does it provide?

At a high level you still pay for your VMs wherever you host them, but Docker Cloud provides you management of them for 2c an hour per node (after your first free node) no matter how big or small they are. You write your code, package it in a Docker Image as per usual and then use Docker Cloud to deploy containers based on those Docker Images to your Docker nodes. You can do this manually or have it triggered when you push your image to somewhere like Docker Hub as part of a continuous integration setup.
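To put a rough number on that management fee, here is a minimal Python sketch. It assumes the 2c is US dollars charged per node, and that a month is about 730 hours; both are my assumptions for illustration, and the function name is made up. Your VM costs from the cloud provider come on top of this.

```python
HOURLY_FEE_USD = 0.02  # "2c an hour" per managed node (currency is an assumption)
FREE_NODES = 1         # the first node is free
HOURS_PER_MONTH = 730  # assumption: average hours in a month


def monthly_management_fee(nodes: int, hours: int = HOURS_PER_MONTH) -> float:
    """Estimate the Docker Cloud management fee, excluding the VM costs themselves."""
    billable_nodes = max(nodes - FREE_NODES, 0)
    return round(billable_nodes * HOURLY_FEE_USD * hours, 2)


# A 2 node cluster: only the second node is billable.
print(monthly_management_fee(2))
```

So under those assumptions a small cluster costs very little to manage compared to the VMs themselves.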

Once you have deployed your apps (“Services” in Docker Cloud terminology) you can use Docker Cloud to monitor them, scale them, check logs, redeploy a newer version, turn them off and so on. It provides an easy to use Web App, REST APIs and a Command Line Interface (CLI).

So how easy is it really?

Getting going …

The first thing you have to do is connect to your cloud provider like Azure. For Azure this means downloading a certificate from Docker Cloud and uploading it into your Azure subscription. This lets Docker Cloud use the Azure APIs to manage things in your subscription for you. (details here)

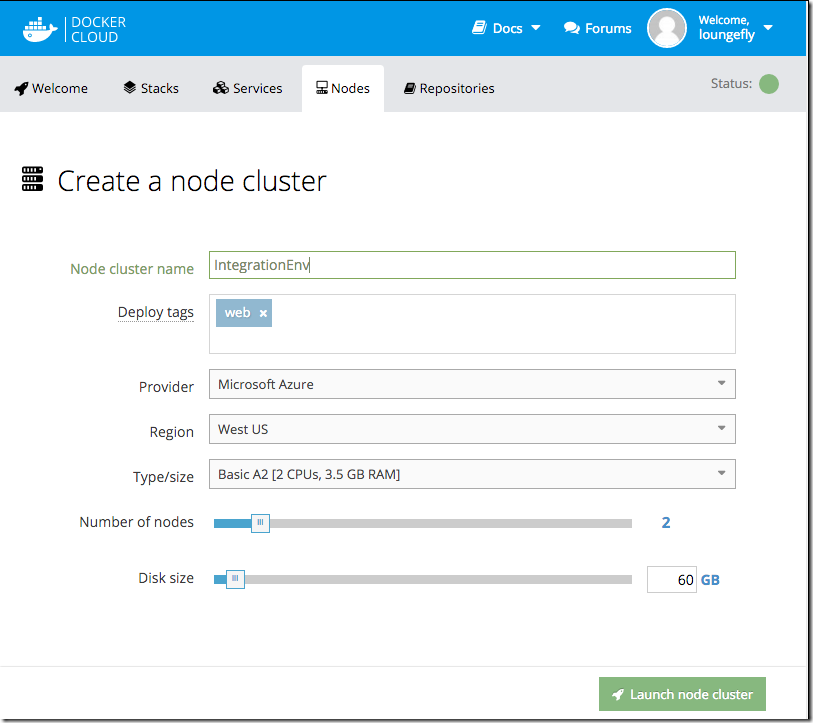

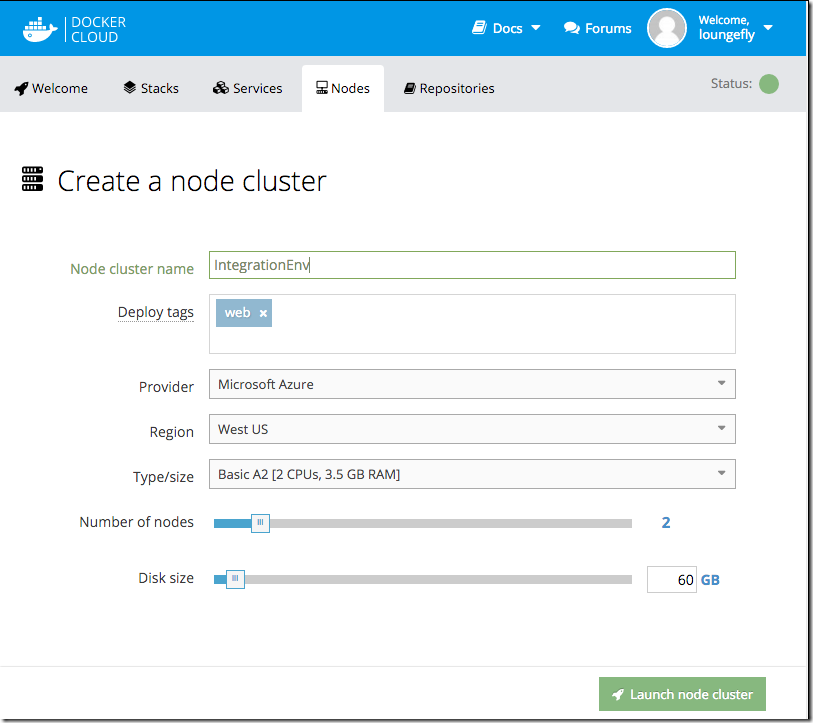

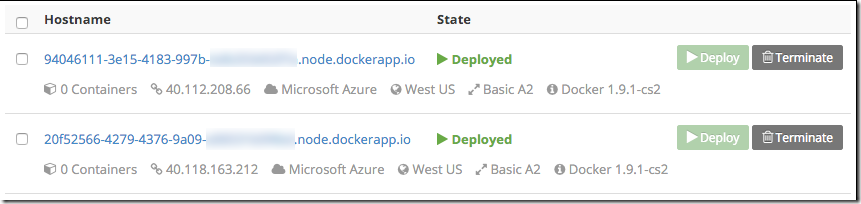

Once you have done that you can start deploying Virtual Machines, “Nodes” in Docker Cloud terminology. Below I’m creating a 2 node cluster of A2 size in the West US region of Azure.

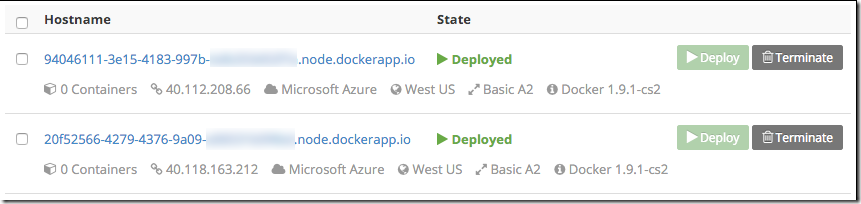

That’s it. Click “Launch node cluster”, wait a few mins (ok, quite a few) and you have a functional Docker cluster up and running in Azure.

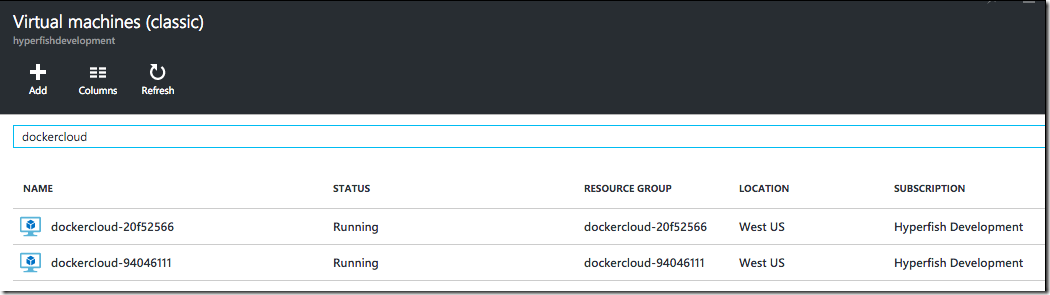

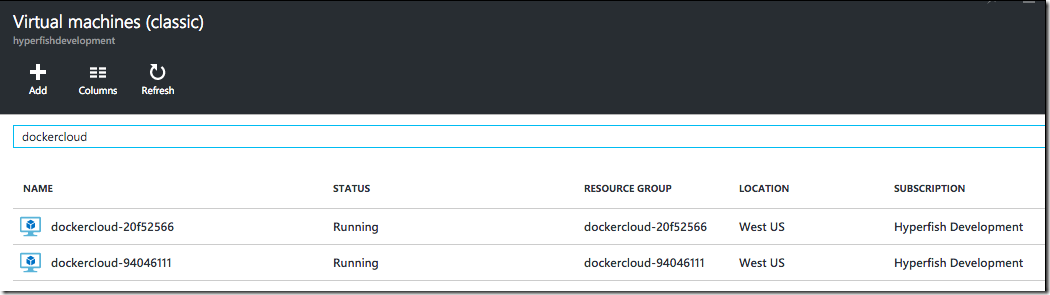

In Azure you can take a look at what Docker Cloud has created for you. Note that at the time of writing Docker Cloud is provisioning “Classic” style VMs in Azure and not using the newer ARM model. It also deploys each VM into its own cloud service and resource group, which isn’t good for production. That said, Docker Cloud lets you Bring Your Own Node (BYON), which means you can provision the VMs however you like, install the Docker Cloud agent on them and then register them in Docker Cloud. Using this you can deploy your VMs using ARM in Azure and configure them however you like.

Deploy stuff …

Now you have a node or two ready you can start deploying your apps to them! Before you do this you obviously need to write your app … or use something simple like a pre-canned demo Docker Image to test things out.

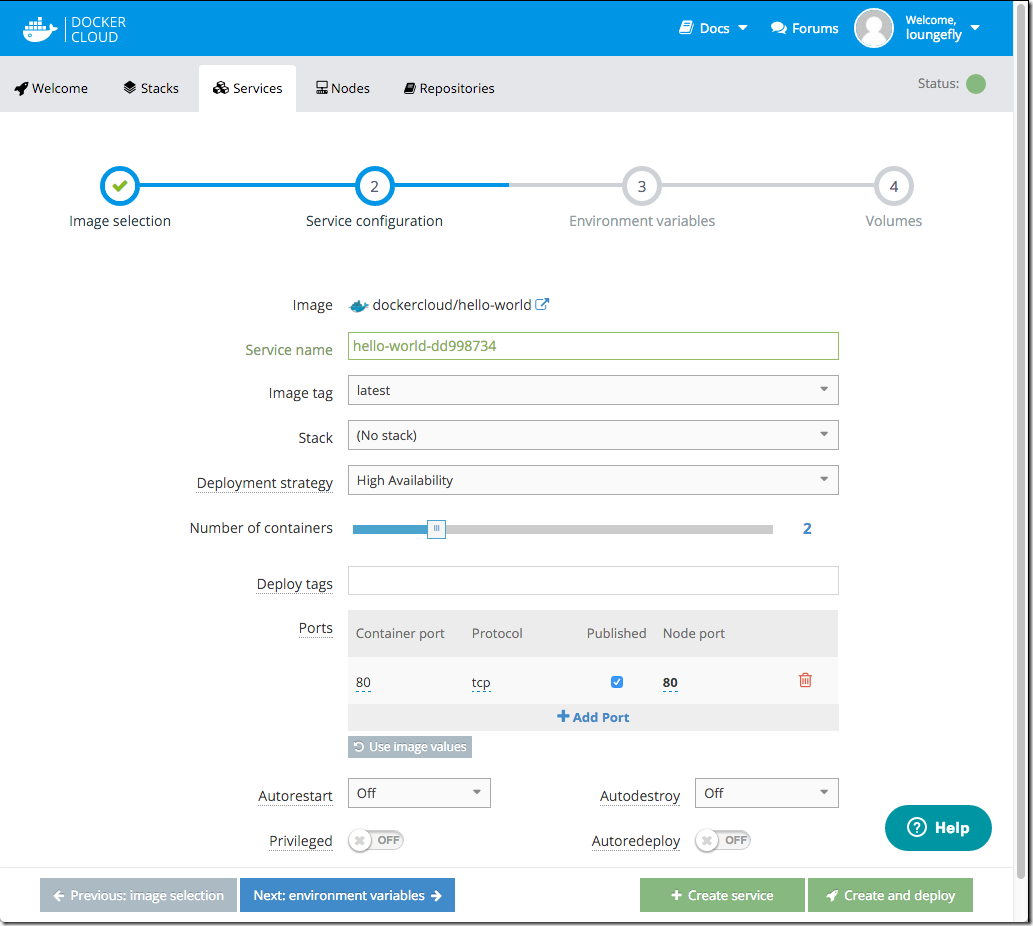

Docker Cloud makes this really simple through “Services”. You create a new service, tell it where it should pull the Docker Image from and set a few other configuration options like which ports to map. Then click Create and your containers will be deployed to your nodes.

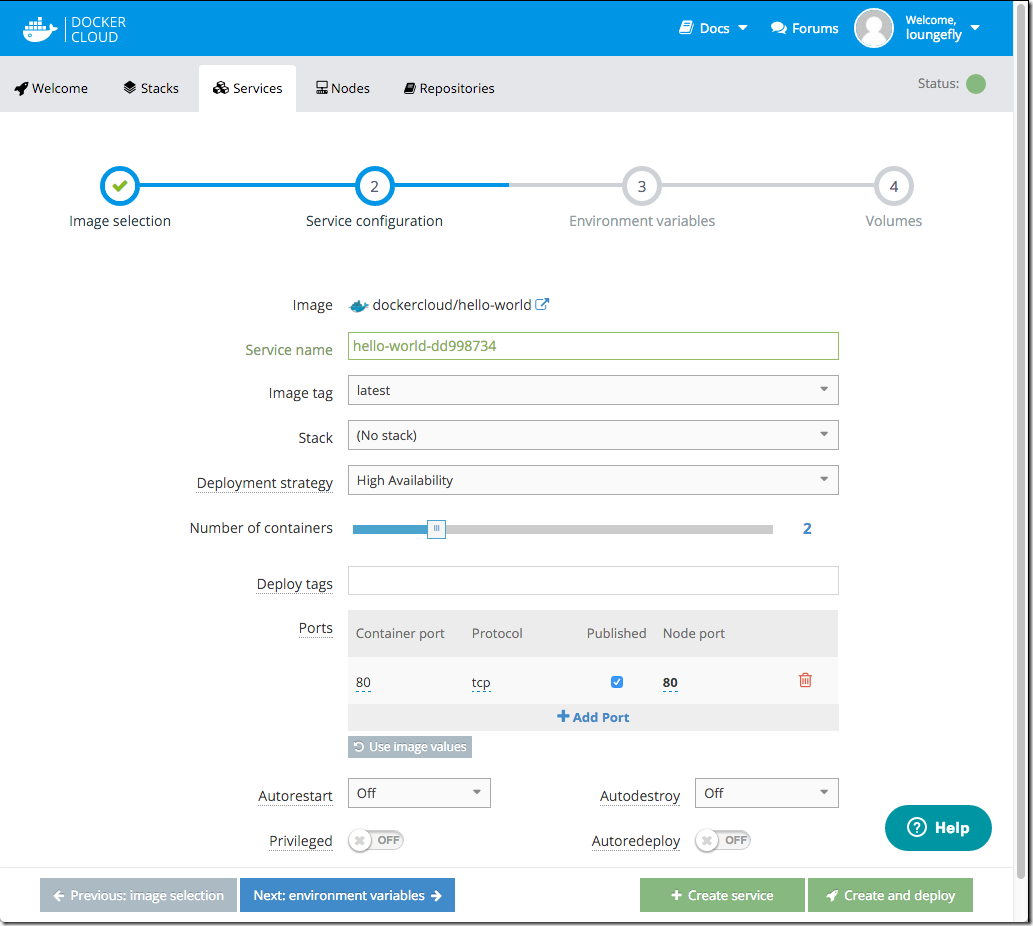

Try this once you have your nodes up and running. Click Services in the top nav, then Create Service. Under Jumpstarts & Miscellaneous category you should see the “dockercloud/hello-world” image. Select it and then set it up like this:

There are only three things I changed from the default setup.

- I moved the slider to 2 in order to deploy 2 containers

- Mapped Port 80 of the container to Port 80 of the node and clicked Published. This maps port 80 of the VM to port 80 of the container running on it so that we can hit it with a web browser.

- Chose High availability as the deployment strategy. This will ensure that the containers are spread across available nodes vs. both on one.

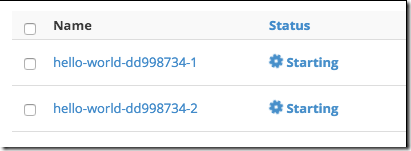

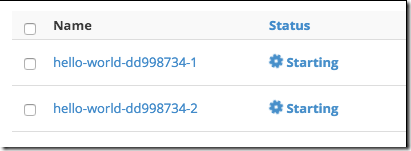

Click “Create and deploy” and you should see your containers starting up. Pretty simple huh!

Note: There is obviously a lot more available via configuration for things like environment variables and volume management for data that you will eventually need to learn about as you develop and deploy apps using Docker.

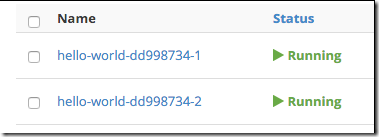

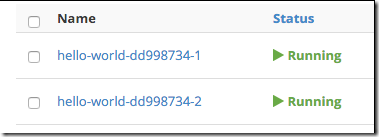

Once your containers are deployed you will see them move to the running state:

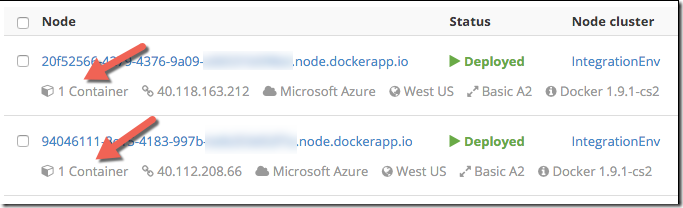

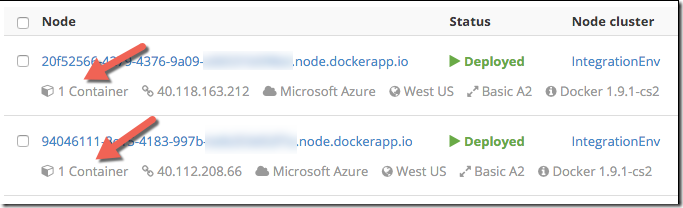

Now you have two hello world containers running on your nodes. If you go back to your list of Nodes you should see 1 container running on each:

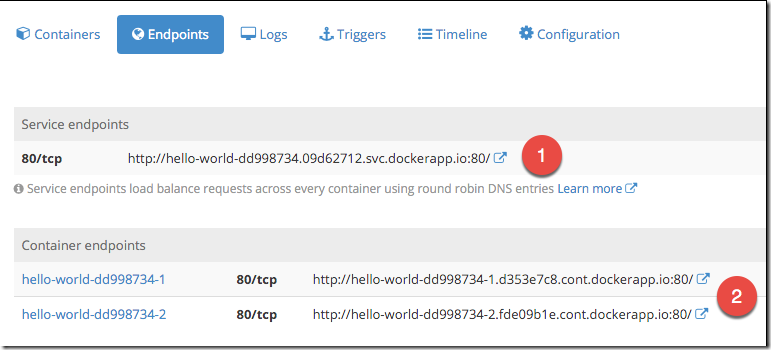

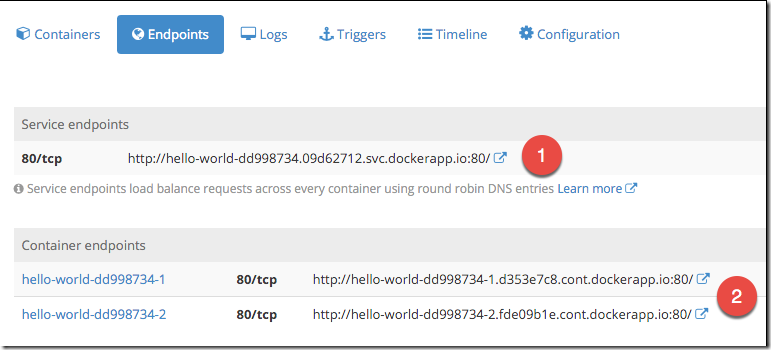

You can test your hello world app out by hitting its endpoint. You can find out what that is under the Service you created in the Endpoints information.

- This is the service endpoint. It will use DNS round robin to direct requests between your two running containers.

- These are the individual endpoints for each container. You can hit each one independently.
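If DNS round robin is new to you, the idea behind the service endpoint can be sketched in a few lines of Python. This is only a toy model: the endpoint names are hypothetical, and real DNS resolvers cache answers, so in practice requests will not alternate this neatly.

```python
from itertools import cycle

# Hypothetical container endpoints; the real ones are listed
# under the Service's Endpoints information.
container_endpoints = ["hello-world-1.example", "hello-world-2.example"]


def round_robin(endpoints):
    """Return an iterator that hands out endpoints in rotation."""
    return cycle(endpoints)


picker = round_robin(container_endpoints)

# Four requests to the service endpoint alternate between the two containers.
served_by = [next(picker) for _ in range(4)]
print(served_by)
```

Hitting one of the individual container endpoints skips this rotation and always lands on that one container.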

Try it out! Open the URL provided in a browser and you should see something like this:

Note that #1 will indicate what container you are hitting.

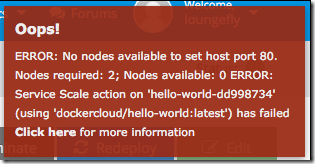

Want more containers? Go into your Service and move the slider and hit apply.

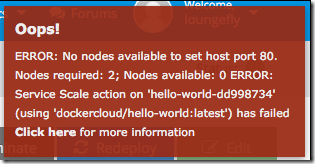

You will get an error like this:

This is because we mapped port 80 of the Node to the Container and you can only do that mapping once per Node/VM, i.e. two containers can’t both be listening on port 80 of the same node. So unless you use HAProxy or similar to load balance your containers you will be limited to one container on each node mapped to port 80. I might write up another post about how to do this better using HAProxy.
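Here is a small Python sketch of why the third container has nowhere to go. It is a toy model of the constraint, not how Docker Cloud actually schedules containers, and the function, node and container names are all made up for illustration.

```python
def place_containers(nodes, count, published_port):
    """Place `count` containers, allowing one per node for a published host port."""
    used_ports = {node: set() for node in nodes}
    placements = []
    for index in range(1, count + 1):
        # Only nodes where the host port is still free are candidates.
        free_nodes = [n for n in nodes if published_port not in used_ports[n]]
        if not free_nodes:
            raise RuntimeError(
                f"container {index}: port {published_port} "
                "is already published on every node"
            )
        node = free_nodes[0]
        used_ports[node].add(published_port)
        placements.append((f"hello-world-{index}", node))
    return placements


nodes = ["node-1", "node-2"]

# Two containers fit: one per node, each owning port 80 on its node.
print(place_containers(nodes, 2, 80))

# A third container fails because both nodes already publish port 80.
try:
    place_containers(nodes, 3, 80)
except RuntimeError as error:
    print("error:", error)
```

Adding a third node, or not publishing a fixed host port and putting a load balancer in front, are the two ways out of this.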

Automate all the things …

We are a small company and we want to automate things as much as possible to reduce the manual effort required for mundane tasks. We have opted to use Docker Cloud for helping us deploy containers to Azure as part of our continuous integration and continuous deployment pipeline.

In a nutshell, when a developer commits code it goes through the following pipeline and is automatically deployed to our staging environment:

- Code is committed to GitHub

- Travis-CI.com is notified and it pulls the code and builds it. Once built it creates a Docker Image and pushes it to Docker Hub.

- Docker Cloud is triggered by a new image. It picks it up and redeploys that Service using the new version of the image.

This way, a few minutes after a developer commits code the app has been built and deployed seamlessly into Azure for us. We have a Big Dev Ops Flashing Thing hanging on the wall telling us how the build is going.

Cool … what else …

At Hyperfish we have been using Tutum for a while during its preview period with what I think is great success. Sure, there have been issues during the preview, but on the whole I think it has saved us a TON of time and effort setting up and configuring Docker environments. Hell, I am a developer kinda guy, not much of an infrastructure one, and I managed to get it working easily, which I think is really saying something 🙂

Is this how you will run production?

Not 100% sure to be honest. It is certainly a fantastic tool that helps you run your apps easily and quickly. But there is a nagging sensation in the back of my head that it is yet another service dependency that will have its share of downtime and issues and that might complicate things. But I guess you could say that about any additional bit of technology you introduce and take a dependency on. That said, traffic to and from your apps does not go through Docker Cloud. It goes direct to your nodes in Azure, so if Docker Cloud has brief downtime your app should continue to run just fine.

I have said that, for the size we currently are and with the team focused on building product, we might consider something else only once we can do a better job than it does for us.

All in all I think Docker Cloud has a lot of great things to offer. It will be interesting to compare and contrast these with the likes of Azure’s new service, Azure Container Service (ACS) as it matures and approaches General Availability. It’s definitely something we will look at also.

-CJ